All content is adapted from CS224N. Please see CS224N for the details!

1. Named Entity Recognition (NER)

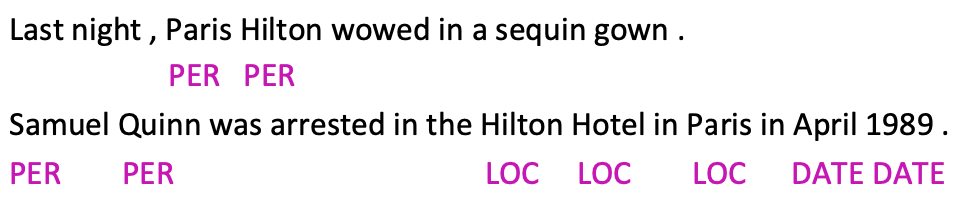

Task: find and classify names in text

- Example

Reference. Stanford CS224n, 2021

- Usages

- Tracking mentions of particular entities in documents

- For question answering, answers are usually named entities

- Often followed by named entity linking/canonicalization into a knowledge base

Simple NER: Window classification using a binary logistic classifier

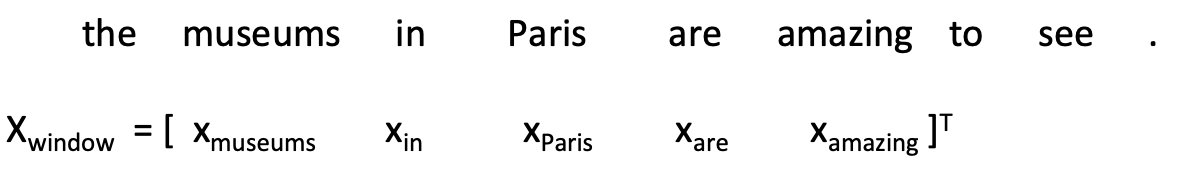

Idea: classify each word in its context window of neighboring words

Train a logistic classifier on hand-labeled data to classify the center word (yes/no) for each class, based on the concatenation of the word vectors in a window
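
A minimal sketch of training one such binary classifier. It assumes plain logistic regression (score \(w^T x + b\)) fit with per-example stochastic gradient descent on the log loss. The function names `train_logistic` and `predict`, the learning rate, the epoch count, and the toy 5-dimensional window vectors are hypothetical choices for illustration. The vectors use one feature per word, so \(d = 1\). In practice one classifier of this form would be trained per class.

```python
import math
import random
from typing import List, Tuple

def sigmoid(s: float) -> float:
    return 1.0 / (1.0 + math.exp(-s))

def predict(w: List[float], b: float, x: List[float]) -> float:
    """Probability that the center word belongs to the class: sigmoid(w.x + b)."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)

def train_logistic(
    data: List[Tuple[List[float], int]], lr: float = 0.5, epochs: int = 200, seed: int = 0
) -> Tuple[List[float], float]:
    """Fit w, b with per-example SGD on the log loss (hypothetical hyperparameters)."""
    rng = random.Random(seed)
    w, b = [0.0] * len(data[0][0]), 0.0
    examples = list(data)
    for _ in range(epochs):
        rng.shuffle(examples)
        for x, y in examples:
            g = predict(w, b, x) - y  # derivative of the log loss w.r.t. the score
            w = [wi - lr * g * xi for wi, xi in zip(w, x)]
            b -= lr * g
    return w, b

# Toy hand-labeled windows (d = 1): label 1 means "center word is a location".
data = [
    ([0.1, 0.3, 0.9, 0.2, 0.4], 1),
    ([0.2, 0.1, 0.8, 0.3, 0.1], 1),
    ([0.1, 0.3, 0.1, 0.2, 0.4], 0),
    ([0.3, 0.2, 0.2, 0.1, 0.3], 0),
]
w, b = train_logistic(data)
print([round(predict(w, b, x), 2) for x, _ in data])  # training-set probabilities
```

The per-example update is the "stochastic" part. Each step uses the gradient from a single labeled window rather than the whole dataset.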

Example: classify “Paris” as \(+\) or \(-\) location in the context of a sentence, with window length 2

Reference. Stanford CS224n, 2021

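The resulting \(x_{window} = x \in \mathbb{R}^{5d}\) is a column vector: with window length 2, the center word and its 2 neighbors on each side give 5 word vectors of dimension \(d\).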
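
A minimal sketch of building \(x_{window}\) by concatenation. It assumes toy 3-dimensional word vectors, so \(d = 3\) and \(5d = 15\). The sentence, the `embeddings` dictionary, and the helper `window_vector` are hypothetical. The helper only accepts center positions that have a full window on both sides.

```python
from typing import Dict, List

# Hypothetical toy embeddings with d = 3; real systems use learned word vectors.
embeddings: Dict[str, List[float]] = {
    "museums": [0.1, 0.2, 0.3],
    "in": [0.0, 0.5, 0.1],
    "Paris": [0.9, 0.1, 0.4],
    "are": [0.2, 0.2, 0.2],
    "amazing": [0.7, 0.3, 0.0],
}

def window_vector(words: List[str], center: int, window: int = 2) -> List[float]:
    """Concatenate the vectors of words[center - window .. center + window]."""
    if center - window < 0 or center + window >= len(words):
        raise ValueError("center word needs `window` neighbors on each side")
    x: List[float] = []
    for i in range(center - window, center + window + 1):
        x.extend(embeddings[words[i]])
    return x

sentence = ["museums", "in", "Paris", "are", "amazing"]
x_window = window_vector(sentence, center=2)
print(len(x_window))  # 15 = 5 words * d (3)
```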

To classify all words, run the classifier for each class on the window vector centered on each word in the sentence
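
A minimal sketch of that loop. It assumes edge words are padded with a hypothetical `<pad>` token so that every word gets a full window. The names `windows`, `classify_all`, and the rule-based `toy_location` stand-in are hypothetical. A real system would score the concatenated word vectors with the trained classifier instead of matching strings.

```python
from typing import Callable, Dict, List

PAD = "<pad>"  # hypothetical padding token for positions outside the sentence

def windows(words: List[str], window: int = 2) -> List[List[str]]:
    """One window of 2*window + 1 words centered on each word, padded at the edges."""
    padded = [PAD] * window + words + [PAD] * window
    return [padded[i : i + 2 * window + 1] for i in range(len(words))]

def classify_all(
    words: List[str],
    classifiers: Dict[str, Callable[[List[str]], bool]],
    window: int = 2,
) -> List[Dict[str, bool]]:
    """Run every per-class binary classifier on the window centered on each word."""
    return [{label: clf(win) for label, clf in classifiers.items()} for win in windows(words, window)]

def toy_location(win: List[str]) -> bool:
    """Placeholder classifier: only looks at the center word."""
    return win[len(win) // 2] == "Paris"

sentence = ["museums", "in", "Paris", "are", "amazing"]
results = classify_all(sentence, {"LOC": toy_location})
for word, res in zip(sentence, results):
    print(word, res)
```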

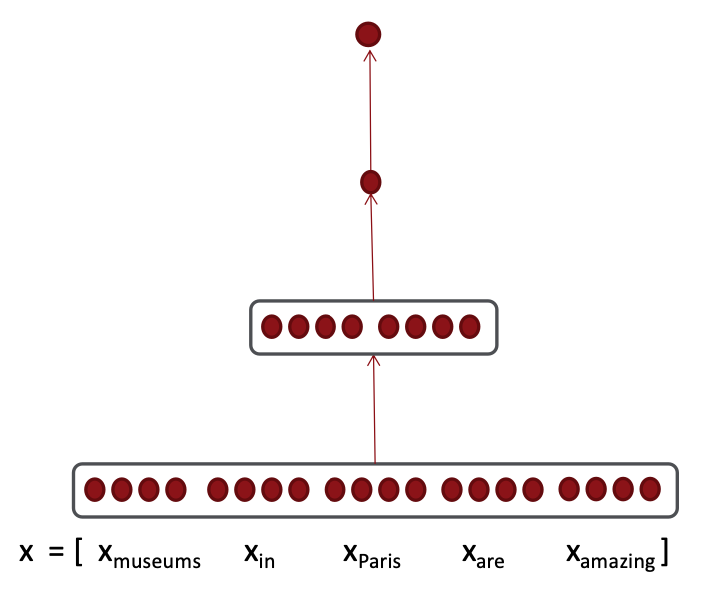

Binary classification of whether the center word is a location

- Model

Reference. Stanford CS224n, 2021

Equation

\[x \text{ (input)}, \quad h = f(Wx + b), \quad s = u^{T}h, \quad J_t(\theta) = \sigma(s) = \dfrac{1}{1+e^{-s}}\]
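
A minimal sketch of this forward pass, computing \(h = f(Wx + b)\), then \(s = u^T h\), then \(\sigma(s)\). The notes leave the nonlinearity \(f\) unspecified, so `tanh` is an assumption here. The helper names and the small untrained weights are hypothetical: \(d = 1\) so \(x \in \mathbb{R}^5\), and the hidden size is 2.

```python
import math
from typing import List

def matvec(W: List[List[float]], x: List[float]) -> List[float]:
    """Matrix-vector product Wx, with W stored as a list of rows."""
    return [sum(w_ij * x_j for w_ij, x_j in zip(row, x)) for row in W]

def sigmoid(s: float) -> float:
    return 1.0 / (1.0 + math.exp(-s))

def forward(x: List[float], W: List[List[float]], b: List[float], u: List[float]) -> float:
    z = matvec(W, x)
    h = [math.tanh(z_i + b_i) for z_i, b_i in zip(z, b)]  # h = f(Wx + b), f = tanh (assumed)
    s = sum(u_i * h_i for u_i, h_i in zip(u, h))  # s = u^T h
    return sigmoid(s)  # J_t(theta) = sigma(s), a probability

# Hypothetical toy parameters: x in R^5 (d = 1), hidden size 2.
x = [0.1, 0.3, 0.9, 0.2, 0.4]
W = [[0.5, -0.2, 1.0, 0.1, 0.0],
     [-0.3, 0.4, 0.8, -0.1, 0.2]]
b = [0.0, -0.1]
u = [1.5, 0.7]
print(forward(x, W, b, u))  # a value strictly between 0 and 1
```

With all-zero parameters, \(h = 0\) and \(s = 0\), so the model outputs exactly \(\sigma(0) = 0.5\), meaning it has no preference either way.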

2. Stochastic Gradient Descent in Neural Networks

$\checkmark$ Mathematics of Stochastic Gradient Descent in Neural Networks

3. Neural Networks

$\checkmark$ Concepts and Techniques of Neural Networks

4. Dependency Parsing

$\checkmark$ Constituency Grammar

Two views of linguistic structure: Constituency

= phrase structure grammar = context-free grammars (CFGs)

Phrase structure organizes words into nested constituents

- Starting unit: words (e.g., the, cat, cuddly, by, door)

- Words combine into phrases (e.g., the cuddly cat, by the door)

- Phrases can combine into bigger phrases (e.g., the cuddly cat by the door)
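
As a small illustration of nesting, the phrase "the cuddly cat by the door" can be stored as a tree and its constituents read back out. This is a sketch under assumptions: the bracketing is one plausible analysis, and the labels (`NP`, `PP`, `Det`, `Adj`, `N`, `P`) are illustrative. The helpers `leaves` and `constituents` are hypothetical names.

```python
from typing import List, Tuple, Union

# A tree is either a word (str) or (label, children); labels are illustrative.
Tree = Union[str, Tuple[str, List["Tree"]]]
PHRASE_LABELS = {"NP", "PP"}  # phrase-level labels; Det/Adj/N/P are word categories

phrase: Tree = ("NP", [
    ("NP", [("Det", ["the"]), ("Adj", ["cuddly"]), ("N", ["cat"])]),
    ("PP", [("P", ["by"]), ("NP", [("Det", ["the"]), ("N", ["door"])])]),
])

def leaves(t: Tree) -> List[str]:
    """Words covered by a (sub)tree, left to right."""
    if isinstance(t, str):
        return [t]
    _, children = t
    return [w for c in children for w in leaves(c)]

def constituents(t: Tree) -> List[Tuple[str, str]]:
    """(label, text) for every phrase-level node, outermost first."""
    if isinstance(t, str):
        return []
    label, children = t
    out = [(label, " ".join(leaves(t)))] if label in PHRASE_LABELS else []
    for c in children:
        out.extend(constituents(c))
    return out

for label, text in constituents(phrase):
    print(label, "->", text)
```

The output lists the bigger phrase first, then the smaller phrases nested inside it. This mirrors the words → phrases → bigger phrases progression above.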

$\checkmark$ Dependency Parsing